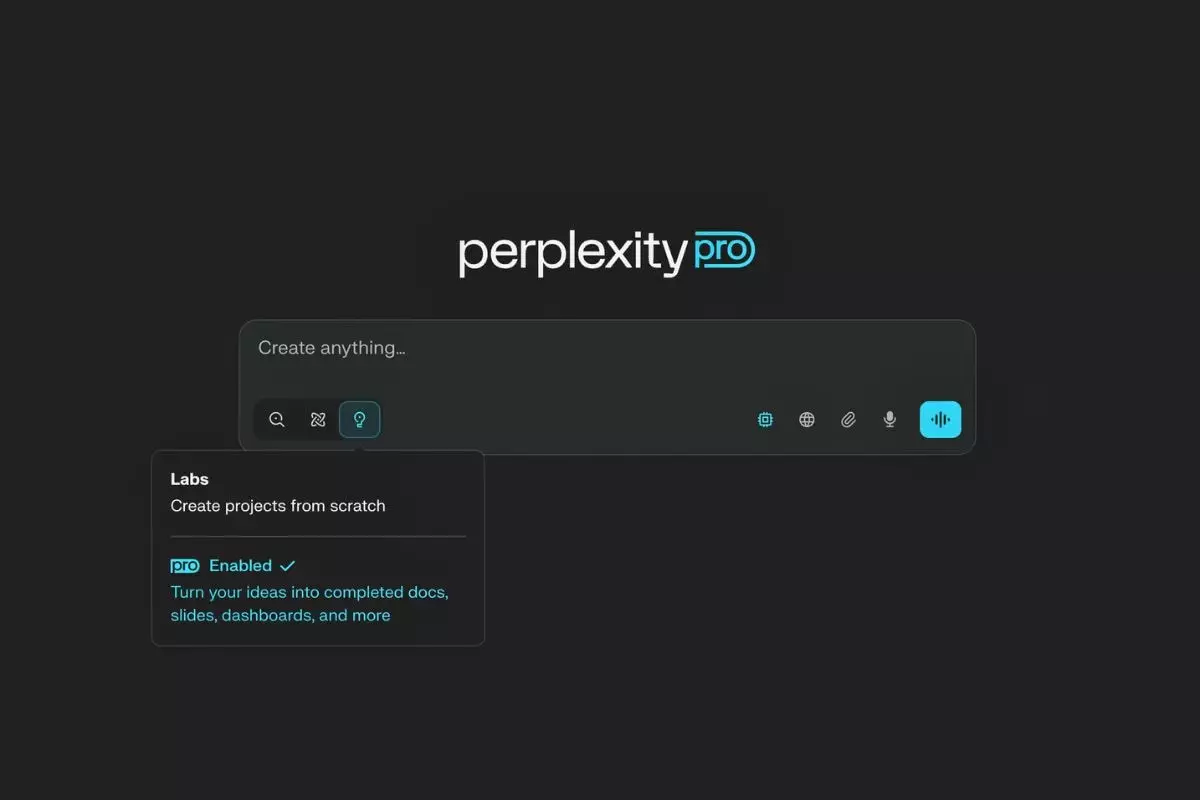

In the fast-evolving landscape of artificial intelligence, Perplexity Labs emerges as a compelling breakthrough that promises to redefine how we approach complex tasks. Launched on Thursday, the feature aims to move users beyond basic query responses toward building complete projects such as reports, spreadsheets, and even web applications, with striking efficiency. While the ambition behind the feature is admirable and forward-looking, its implications deserve critical scrutiny.

Perplexity Labs touts capabilities built on deep web browsing and code execution, technologies whose practical value for everyday users has so far been limited. The premise is that tasks once considered intricate can now be handled through a user-friendly interface, significantly lowering the barrier for people unfamiliar with technical jargon. However, this creates a real tension: the promise of democratizing technology is enticing, but it also raises ethical and operational concerns that cannot be overlooked.

Beyond Ordinary Searches: The Complexity Catalyst

What makes Perplexity Labs particularly striking is its ability to generate outputs beyond plain textual responses. Turning text prompts into working projects opens pathways for diverse fields, from financial analysis to creative storytelling. This blend of automation and intelligent design raises an intriguing question: are we ready to trust AI systems to craft our strategic frameworks or personal narratives?

Although Labs is positioned as an enhanced version of Deep Research, its underlying technology remains shrouded in ambiguity. It is not clear whether these tools act as autonomous agents or coordinate in multi-agent workflows, and that lack of transparency raises valid concerns about accountability and reliability. The fear is that users might overestimate AI’s problem-solving capacity, leading to erroneous outcomes in critical areas such as finance or healthcare.

Assets and Oversight: The Missing Links

In a bold move, Perplexity Labs introduces an “Assets” tab that stores all generated documents, code files, charts, and images, effectively serving as a digital toolkit for users. This, however, raises important questions about governance and oversight. As intelligent systems create assets autonomously, who bears responsibility if these outputs turn out to be incorrect or misleading? Without stringent quality checks or a mechanism for user review, the risk of spreading unverified information grows considerably.

Moreover, the ability to create basic dashboards or entire websites sounds like a boon, yet it may lead inexperienced users into a quagmire of overconfidence. The excitement around AI is palpable, but favoring automated convenience over human expertise could carry real long-term costs.

The Double-Edged Sword of Self-Supervised Tasks

Perplexity indicates that some Labs tasks may take ten minutes to complete, describing this work as “self-supervised.” The concept brings both innovation and concern to the forefront. Automation promises efficiency, but it also raises the specter of weakened critical thinking and problem-solving skills among users. Are we nurturing a generation of thinkers, or are we inadvertently cultivating dependence on AI for creativity and analysis?

Letting users view the chain of thought (CoT) is beneficial, yet the lack of clarity about whether they can pause or redirect the process casts a shadow on user control. Engagement with AI should not become a passive experience; users must be able to actively steer the content the AI generates.

Future Prospects: A Hesitant Embrace

As we move further into this era of AI-powered creativity and productivity, Perplexity Labs invites a dual response: admiration for its capabilities, coupled with caution about its implications. The feature could help unlock an age of enhanced human-computer collaboration, but only if it is developed and used with responsibility and ethical foresight.

A central liberal ideal is the empowerment of individuals through technology, yet technology’s rapid evolution compels us to reassess our readiness for autonomous AI in creative and strategic roles. The question remains: can we ensure that AI serves as an aide rather than an independent operator in our pursuit of knowledge and creativity? The path forward will require careful navigation, balancing innovation with responsibility to secure a truly enlightened technological future.
