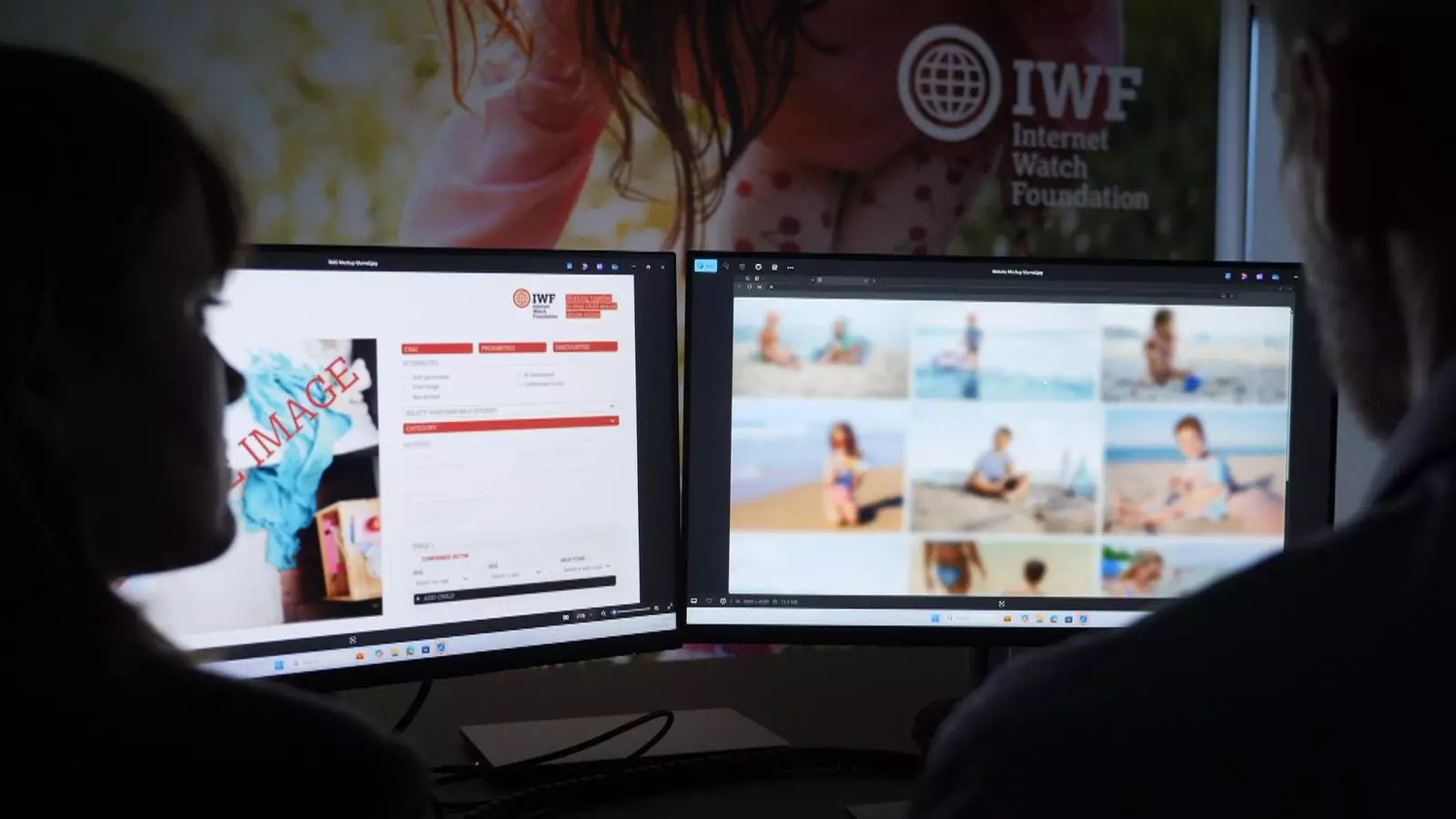

In response to the alarming rise of child sexual abuse material (CSAM) generated by artificial intelligence, the government has announced new legislation aimed at curbing this gruesome trend. The move is an urgent call to action as society grapples with the chilling reality of AI’s capacity to create disturbingly realistic abuse imagery. As we delve into the implications of this legislation, we must consider not only its immediate objectives but also its broader ramifications for technology, safety, and the battle against child exploitation.

The newly announced measures are part of a broader plan to combat AI-enabled child sexual abuse. Key provisions include:

1. **Criminalization of AI Tools**: The proposed law makes it illegal to possess, create, or distribute AI tools specifically designed to generate CSAM. Individuals caught violating this provision may face up to five years in prison.

2. **Ban on Paedophile Manuals**: The legislation also targets “paedophile manuals” that instruct individuals on using AI for child exploitation. Possession of such material may result in a three-year prison sentence.

3. **Targeting Online Predators**: New crimes will be established for those running websites that serve as platforms for sharing abusive content or grooming techniques. Convictions here could lead to sentences of up to ten years.

4. **Enhanced Powers for Law Enforcement**: Additional measures include empowering the UK Border Force to compel suspected online predators to unlock their electronic devices for inspection.

While the government’s commitment to addressing these issues is commendable, these provisions raise questions about their enforcement and the privacy rights of individuals.

AI-generated CSAM has emerged from ongoing advances in technology that, while innovative, also leave society vulnerable to new forms of exploitation. Reports from organizations such as the NSPCC highlight the emotional toll on children who find themselves victims of these insidious practices. It is crucial to address the psychological impact alongside the legal provisions, as the fear these realistic fakes instil in children could hinder their willingness to report such abuse.

For instance, one distressing account from a 15-year-old girl illustrates the complexity of feelings surrounding fake images that appear uncomfortably real. The fear of disbelief from family, coupled with the trauma of being targeted, underscores the psychological scars left on young victims. Addressing the problem therefore cannot rely solely on legislation; it also requires a cultural shift in how we handle discussions about consent, privacy, and the digital footprints we leave behind.

Jess Phillips, the safeguarding minister, declared the UK a pioneer in legislating against AI abuse imagery. However, while the UK takes commendable steps to confront this issue, the international nature of the internet complicates enforcement. Effectively mitigating the risks associated with AI-generated CSAM will require a collaborative global approach.

As the government embarks on this initiative, there is a pressing need for international cooperation to share intelligence, strategies, and technological advancements aimed at tackling this form of abuse. Without a synchronized global response, the effectiveness of the legislative measures could be significantly compromised, allowing predators to exploit jurisdictional loopholes.

Altering the legal framework to encompass AI-generated CSAM presents unique challenges. The enforcement of these laws may require substantial resources, training, and technological advancements to keep pace with rapidly evolving AI capabilities. Additionally, legal definitions of what constitutes AI-generated CSAM and how it can be differentiated from genuine content will be critical to ensuring that innocent parties are not unfairly targeted.

Moreover, balancing the urgency of combating child exploitation against the need for digital privacy and freedom of expression remains a complex and often fraught struggle. The introduction of AI tools in law enforcement raises ethical questions regarding surveillance and individual rights that cannot be overlooked in the quest for safety.

While the government’s legislative efforts mark a decisive step forward in addressing the urgent issue of AI-generated child sexual abuse material, the fight against this atrocity demands a more profound, multidimensional response. Empowering communities, raising awareness, fostering international cooperation, and most importantly, prioritizing the mental well-being of survivors are essential in constructing a robust strategy. Legislation alone cannot eradicate the menace posed by AI to the youngest and most vulnerable members of society; it requires a holistic commitment to safety, understanding, and compassion.
